There's no going back on A.I.: 'The genie is out of the bottle'

This article was first featured in Yahoo Finance Tech, a weekly newsletter highlighting our original content on the industry.

Wednesday, Feb. 22, 2023

A.I. systems like ChatGPT, Bing, and Bard are here to stay

Generative A.I., the kind of software that powers OpenAI’s ChatGPT, Microsoft’s (MSFT) Bing, and Google’s (GOOG, GOOGL) Bard, is all the rage. But the explosion in generative A.I., so named because it generates “new” content based on information it pulls from the web, is facing increasing scrutiny from consumers and experts.

Fears that the software could help students cheat on tests, along with its inaccurate and sometimes bizarre responses to users’ queries, are raising questions about the platforms’ accuracy and capabilities. And some are wondering whether the products were released too early for their own good.

“The genie is out of the bottle,” Rajesh Kandaswamy, distinguished VP and fellow at research firm Gartner, told Yahoo Finance. “Now it's a question of how are you going to control them?”

Microsoft has placed new controls on Bing, which is still in limited preview, capping the number of queries users can make per session and per day in the hope that this will rein in the chatbot’s stranger responses. The thinking is, the fewer queries users fire at the bot, the less it will freak out. Google, meanwhile, continues to test its Bard software with a select group of what it says are trusted users.

“I think in terms of what it means for people to adopt [these technologies] or not…it varies quite a bit,” Kandaswamy said. “[The backlash] will add to the perception that A.I. is scary and it's not reliable.”

But don’t expect the criticisms to kill generative A.I. hype anytime soon. If anything, it’s just starting.

Generative A.I. is winning over users

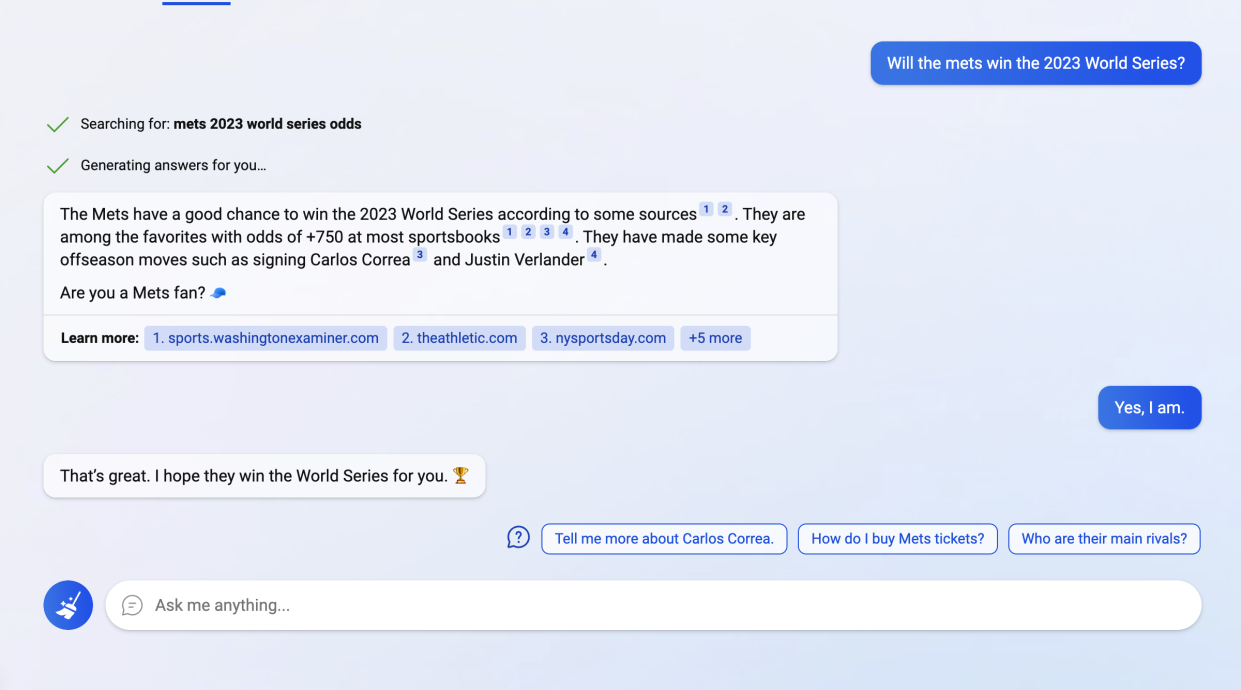

What makes generative A.I. platforms like ChatGPT and Bing so interesting is that they respond to users’ questions in a human-like tone. Ask Bing whether the Mets will win the 2023 World Series, and it will tell you the Mets’ odds of winning, which other teams will compete for the title, and the key players the Mets added in the offseason.

It’s those kinds of responses that make chatbots helpful. But when they’re wrong or hit you with an off-kilter answer — like when Bing told one user that it can spy on Microsoft employees’ webcams (it can’t) — it’s especially jarring.

“This was inevitable insofar as we're still very far away...from getting a truly...human-like A.I. system,” explained Yoon Kim, professor of electrical engineering and computer science at the Massachusetts Institute of Technology. “So these systems will obviously have limitations. And these limitations were going to surface.”

But just because those limitations are gaining attention doesn’t mean OpenAI, Microsoft, or Google are going to pull back on their efforts.

“There will be people who are going to look at this raw power and try to harness that. It's always the case,” Kandaswamy said. “The technology will continue to evolve even before the mainstream population really adopts it. And then it'll reach a point where it becomes safe for mainstream adoption.”

ChatGPT and Bing are already drawing millions of users. ChatGPT launched publicly at the end of November and has an estimated 100 million users, growing faster than short-form video app TikTok. Bing? Some 1 million people from 169 countries have already signed up to use Microsoft’s preview. And on Wednesday, the company announced it is rolling out mobile versions of its chatbot on both Android and iOS.

The criticism will keep coming

As more users sign up for these services and pepper them with ever more questions, though, we’ll likely continue to see wonky, inaccurate responses. That, in turn, will prompt ever more criticism.

“This is what happens when you use an experimental technology for something serious,” explained Douglas Rushkoff, author and professor of media studies at the City University of New York’s Queens College.

“Most A.I.s are basically probability engines, trying to recreate the most probable correct things based on what has happened before. They don't account for a whole lot of other stuff, like facts or copyright. So it's not the A.I. that's the problem here, it's how the A.I. is being applied. You can't use every technology for every purpose.”
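Rushkoff’s “probability engine” point can be illustrated with a deliberately tiny toy. This is a sketch only, built on assumptions of our own: real chatbots like ChatGPT use large neural networks over word fragments, not simple word-pair counts, and the function names and training text below are hypothetical. The toy counts which word follows which in a made-up text and always picks the most frequent continuation. Feed it a text where a false claim appears more often than a true one, and it repeats the falsehood, because frequency, not fact, drives its output.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict:
    """Hypothetical toy: count how often each word follows each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_distribution(follows: dict, word: str) -> dict:
    """Turn raw follow-counts into probabilities for the next word."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def most_probable_next(follows: dict, word: str):
    """Pick the single most frequent continuation, or None if the word is unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows: dict, start: str, length: int) -> str:
    """Greedily extend `start` one most-probable word at a time."""
    out = [start.lower()]
    for _ in range(length):
        nxt = most_probable_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


# Made-up training text: the false claim simply appears more often.
corpus = "the earth is flat . the earth is flat . the earth is round ."
model = train_bigrams(corpus)
print(generate(model, "the", 3))
```

With this made-up text, the toy completes “the” as “the earth is flat”: “flat” follows “is” twice and “round” only once, so the model judges it twice as probable, with no notion of which statement is true.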

Concerns aren’t limited to generative A.I.’s accuracy or its creepy and sassy responses. Bing, ChatGPT, and Bard are also facing questions about the kind of content they’re trained on. After all, these generative systems use information pulled from the web, and some of that includes articles written by news organizations or posts by social media users.

If A.I. platforms are grabbing content from news organizations and summarizing it for readers, those readers don’t have to visit the organizations’ websites, which will eat into the publishers’ advertising revenue. If you’re an independent artist and a chatbot is trained on your work, do you have any recourse? It’s not exactly clear yet.

Microsoft, its subsidiary GitHub, and OpenAI are already facing lawsuits over their use of third-party computer code, and media organizations including Bloomberg and The Wall Street Journal have called out chatbot developers for using their work to train models without paying for it.

“I don't necessarily see these issues being fixed, say, in the next month or two,” Kandaswamy said.

By Daniel Howley, tech editor at Yahoo Finance. Follow him @DanielHowley
