After her son's suicide, she was left looking for answers. His TikTok gave horrifying clues.

Editor’s note: This story includes graphic descriptions of videos that refer to self-harm. If you or someone you know is in crisis, call 988 to reach the Suicide and Crisis Lifeline. You can also call the network, previously known as the National Suicide Prevention Lifeline, at 800-273-8255, contact the Crisis Text Line by texting HOME to 741741 or visit SpeakingOfSuicide.com/resources.

It had been four months since Jennie DeSerio’s son died by suicide. Wracked by grief and wondering what she didn’t know, she picked up his phone and decided to go through his TikTok account.

What she found horrified her.

Shortly before he died, she found, her 16-year-old, Mason Edens, had liked dozens of graphic videos about breakups, depression and suicide. She knew Mason had recently been through a bad breakup — she didn’t know what he was watching on a platform that he was increasingly engrossed with.

DeSerio said she found at least 15 videos Mason liked that directly promoted suicide, some of which are still on the platform more than a year later. At least five specifically promoted the method he had used.

NBC News reviewed the videos and found that some had accrued tens of thousands of likes. TikTok uses likes as a signal for its “For You” page algorithm, which serves users videos that are supposed to resonate with their interests.

“I completely believe in my heart that Mason would be alive today had he not seen those TikTok videos,” DeSerio said.

She’s now part of a lawsuit with eight other parents against several social media companies over what they say are product defects that led to their children’s deaths. The lawsuit alleges that TikTok targeted Mason with videos that promoted suicide and self-harm. Their suit is one of a group of lawsuits pursuing a novel legal strategy that argues that social media platforms like TikTok are defective and dangerous because they are addictive for young people. Advocates hope that can be a way for people to get justice for harms allegedly caused by social media.

In at least four other active lawsuits brought against TikTok and other social media companies, parents have said TikTok content contributed to their children dying by suicide. In another lawsuit, filed this month, two tribal nations sued TikTok, Meta, Snap and Google alleging that addictive and dangerous designs of social media platforms have led to heightened suicide rates among Native Americans. Google said the allegations are “not true,” and Snap said it was continuing to work on providing resources around teenage mental health.

Suicide is a complex issue, and the Centers for Disease Control and Prevention says it is rarely caused by a single circumstance or event. “Instead, a range of factors — at the individual, relationship, community, and societal levels — can increase risk. These risk factors are situations or problems that can increase the possibility that a person will attempt suicide,” the CDC says on its suicide prevention website.

A TikTok spokesperson said the company couldn’t comment on ongoing litigation but said, “TikTok continues to take industry-leading steps to provide a safe and positive experience for teens,” noting that teen accounts are set to private by default and that teens have an opt-out 60-minute screen time allowance before they’re prompted to enter a passcode.

TikTok has clear policies against content that promotes suicide or actions that could lead to self-harm, but in a sea of billions of videos, some content that glorifies suicide is still slipping through the cracks.

DeSerio said Mason became so hooked on TikTok that he struggled to sleep sometimes, which led to anxiety issues. Friends said he found an emotional outlet on TikTok in particular as he was going through his first heartbreak.

“A 16-year-old boy should never be sent videos like that on TikTok. They’re not going to self-regulate until there’s true accountability,” she said of social media companies.

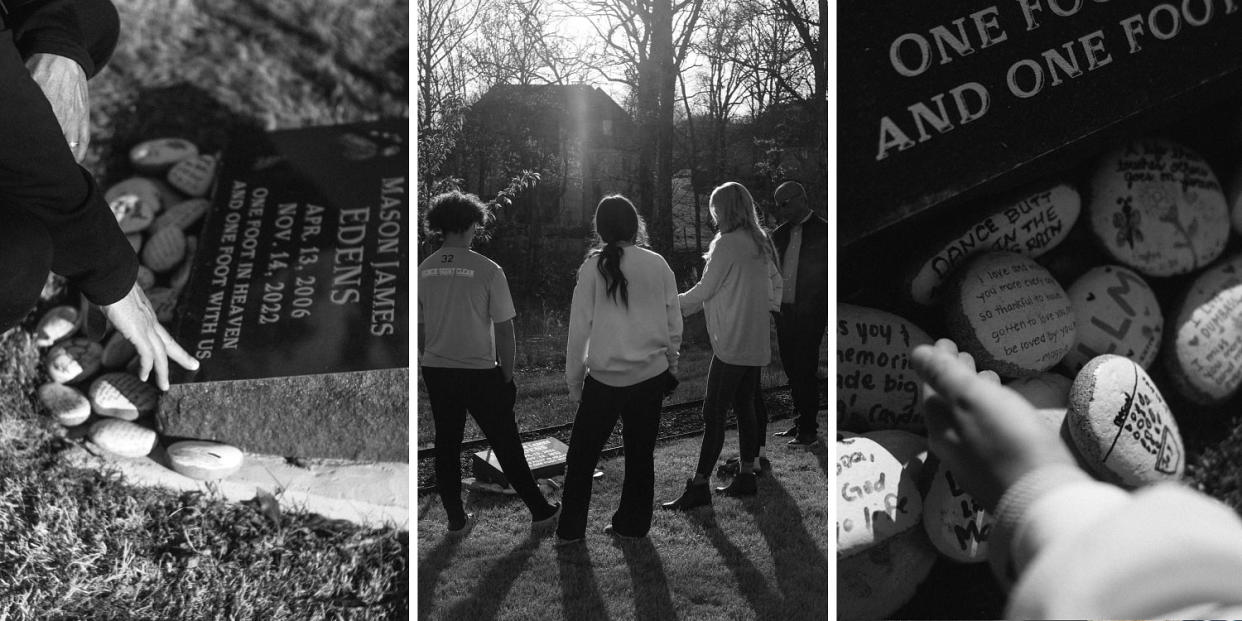

Mason loved sports and the outdoors.

“We were always playing basketball, throwing the football. We were always just outside doing something,” his friend Braxton Cole-Farmer said. “Mason didn’t like just sitting at home doing nothing. If we didn’t have anything to do, we’d just go drive.”

“He was always there for everybody. If anybody just needed a friend to talk to, he wouldn’t judge you based on what’s going on in your life,” he said.

Cole-Farmer said that Mason would retreat into his phone when he was having a tough time but that it wasn’t a cause for concern.

“I mean, all of us are teenagers. We’re all addicted to our phones. So, like, seeing that, we didn’t really catch a red flag on it, because my generation of teenagers are always on their phone.”

In the months before he died, Mason had been in a turbulent relationship that family and friends said ended in a breakup. He was heartbroken, and the fallout rippled through his life at school.

At first, Mason’s parents thought it was normal teenage sorrow. But a few weeks after the breakup, Mason and his mom decided he might need professional help and began actively trying to arrange a therapy visit.

DeSerio said Mason knew the breakup had made his anxiety worse and was taking proactive steps to try to feel better.

“He was showing some anger that he didn’t usually express,” she said.

DeSerio said the family had very open lines of communication, talking about mental health, anxiety and potential treatments in the two weeks leading up to Mason’s death. She said what she heard and saw from him didn’t seem like an emergency.

“There were also a lot of really happy times in those two weeks, too,” she said.

What she didn’t see was what Mason was consuming online — videos that included graphic and detailed depictions and methods of self-harm.

Mason liked one video in which an audio overlay said, “I wanna put a shotgun to my f------ mouth and blow my brains out,” with accompanying text about depression. The audio from that post was eventually removed, but the video remains up. Another described a plan to die by suicide, along with commentary about relationship issues.

One video he liked — it had over 67,000 likes — has text reading “what are your plans for the future?” over slow-motion video of a firearm discharging. That video is no longer available on the platform.

Even though the videos clearly allude to suicide when their elements are taken together, it appears that many of them avoided detection by TikTok’s automated moderation system. According to TikTok, the auto-moderation system is designed to pick up various types of signals that might indicate a community guidelines violation, including keywords, images, titles, descriptions and audio in a video.

TikTok declined to comment on how or why the videos Mason liked evaded its moderation system.

In addition to watching and liking the videos that mentioned suicide, Mason posted TikTok content the day he died about a rapper named Lil Loaded. Lil Loaded gained notoriety on TikTok after he reportedly died by suicide following a breakup.

After he died, Lil Loaded became a frequently cited figure among some communities on TikTok, where dozens of videos that are still on the platform glorified his death, some with over 1 million views. Most of the videos use Lil Loaded’s image or name as shorthand for dying by suicide in reaction to a breakup.

One video that was still on the platform as of early April and had over 100,000 views included an audio clip saying “oh god, why am I even living bro, why do I live?” along with text over a video reading, “bout to pull a lil loaded.”

Mason’s stepbrother, Anthony, 16, said Mason changed his TikTok profile photo the day he died to a photo of Lil Loaded and joked with him before school that he was going to “pull a Lil Loaded.”

Anthony said he asked Mason whether he was suicidal, but Mason said he was just joking around.

That evening, Nov. 14, 2022, DeSerio tried to take Mason’s phone away from him so he could get a good night’s sleep, something she said she regularly did for his mental health. But Mason had just gotten his phone back after having been grounded for fighting at school, DeSerio said. When she tried to take it away from him again, he ran across the room and punched her.

DeSerio said she was shocked. He had never been violent toward her, and it wasn’t like the Mason she knew.

Mason’s mother and stepfather, Dave, took his phone away.

While Jennie and Dave regrouped, Mason, crying and emotional, went to his room and locked the door without their realizing.

When Dave realized that Mason had gone to his room, he ran there and pounded on the door, trying to get him to unlock it.

But Mason was already gone. The 16-year-old died from a self-inflicted gunshot wound.

Social media companies have been immunized from legal responsibility for most content on their platforms by Section 230, a provision of the 1996 Communications Decency Act that says the platforms can’t be treated as publishers of content posted by third parties.

The law has generally insulated social media companies from lawsuits about content on their platforms, but advocates of stricter regulations have recently been pushing to find novel legal strategies to hold tech companies accountable.

DeSerio’s lawsuit and hundreds of others aim to sidestep Section 230 by tying their claims to the legal concept of defective product design.

DeSerio’s suit describes TikTok’s design as manipulative, addictive, harmful and exploitative.

“TikTok targeted Mason with AI driven feed-based tools,” it says. “It collected his private information, without his knowledge or consent, and in manners that far exceeded anything a reasonable consumer would anticipate or allow. It then used such personal data to target him with extreme and deadly subject matters, such as violence, self-harm, and suicide promotion.”

California courts and a federal court are both waiting to begin hearing groups of cases making such arguments, which could open social media companies to a variety of claims around product safety.

Matthew Bergman and his firm, the Social Media Victims Law Center, are representing DeSerio and the other plaintiffs in her case.

“It is our contention that TikTok in particular is an unreasonably dangerous product, because it is addictive to young people,” he said.

Bergman contends that Mason took his own life because of what he viewed on TikTok.

“TikTok, in order to maintain his engagement over a very short period of time, deluged him with videos promoting that he not only take his life, but that he do so” in a specific way, Bergman said.

Content that promotes suicide and self-harm has been a persistent issue for TikTok and other social media platforms for years.

In November, Amnesty International released a research report that found that teens’ accounts on TikTok that expressed interest in mental health quickly went down a rabbit hole of videos about the topic that eventually led to numerous videos “romanticizing, normalizing or encouraging suicide.”

Suicide rates among young people in the U.S. increased 62% from 2007 to 2021, according to the Centers for Disease Control and Prevention. In 2022, suicide rates for young people decreased slightly. Mental health professionals have said the U.S. is in the midst of a teen mental health crisis.

Lisa Dittmer, a researcher at Amnesty International, told NBC News that through interviews with teens, the organization found that “there were times they just weren’t in a capacity to actively counter that impulse to seek out depressive thinking. That would amplify the voice in their heads that said ‘life is all pain and pointless.’”

TikTok criticized Amnesty International’s research in a statement, saying its categorizations of mental health-related videos were overly broad.

The TikTok app immediately presents users with short-form videos, often from the “For You” page, which uses an algorithm that chooses which videos to serve people next. The recommendation system is one of the most powerful features of the platform. It has been repeatedly characterized as addictive by groups like Amnesty International and the Social Media Victims Law Center and as knowing users better than they know themselves. In a document reportedly seen by The New York Times in 2021, TikTok explained that the algorithm was optimized to keep users on the platform for as long as possible and coming back for more, analyzing how every person who uses it interacts with each video. According to the Times report, the equation considers what videos users like, what they comment on and how long they watch certain videos. NBC News hasn’t verified the document.

TikTok has said it has made efforts to try to prevent content rabbit holes, providing tools to enable users to restart their recommendation algorithms and filter out videos including certain words. TikTok also allows parents to oversee teen accounts and further customize screen time and content controls.

But Dittmer said teens who had tried the tools described them in interviews as ineffective.

Dittmer said young people dealing with mental health issues were susceptible to falling into depressive rabbit holes on TikTok.

“It’s not so much that your average teenager will automatically turn depressive or suicidal from being on TikTok, but for young people who have that thinking in their head, TikTok will just latch on to your interest and your vulnerability and amplify that relentlessly,” she said.

Megan Chesin, a psychology professor at William Paterson University in New Jersey who has studied the connection between media and suicide, said the primary risk of social media for susceptible people is that the content could be encouraging or instructive.

“The risk, of course, is that individuals, like this adolescent that you’re writing about, learn something or are given permission or capability to die by suicide through what they see or understand on social media,” Chesin said. “The more you are exposed to something, the lower your threshold for acting on your own thoughts or desires to die can be.”

At a House of Representatives hearing last March, Rep. Gus Bilirakis, R-Fla., played for TikTok CEO Shou Chew videos found on the platform that promoted suicide, asking him whether TikTok was fully accountable for its algorithm. Two of the videos included graphic descriptions of suicide via firearms. Chew responded by saying, “We take these issues very seriously, and we do provide resources for anybody that types in something suicide-related.”

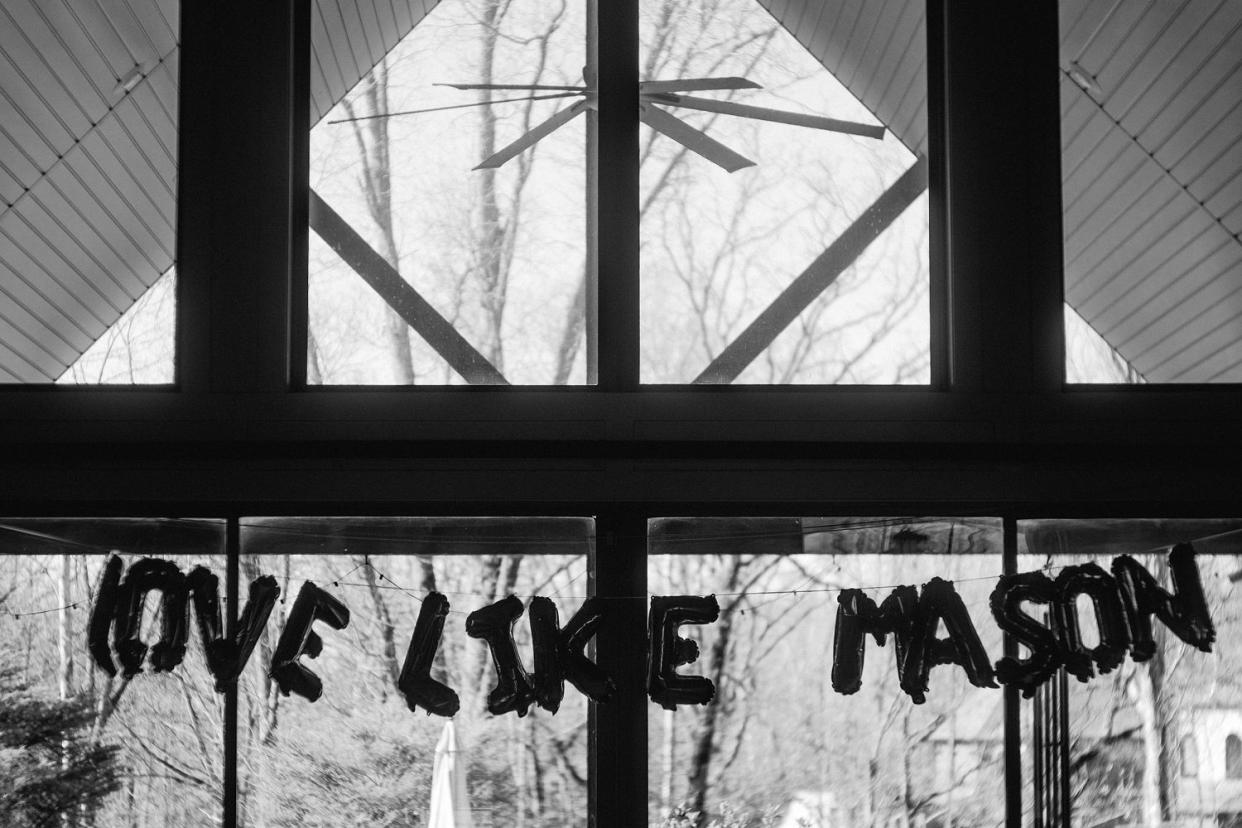

While DeSerio waits for her own story to be heard in court, she has poured her efforts into bringing attention to how social media can affect children and teens.

“Every day I wake up knowing that I need to share the larger message in order to save another child, another mother from this grief,” DeSerio said.

DeSerio agreed to be filmed for a documentary about Mason’s story that is in production, and in January, she and Mason’s stepfather flew to Washington, D.C., to be present as Chew testified in front of the Senate Judiciary Committee along with other tech CEOs about child safety issues and social media.

“It’s standing in front of all of society and challenging the ‘norm.’... Sometimes it’s really scary,” she said.

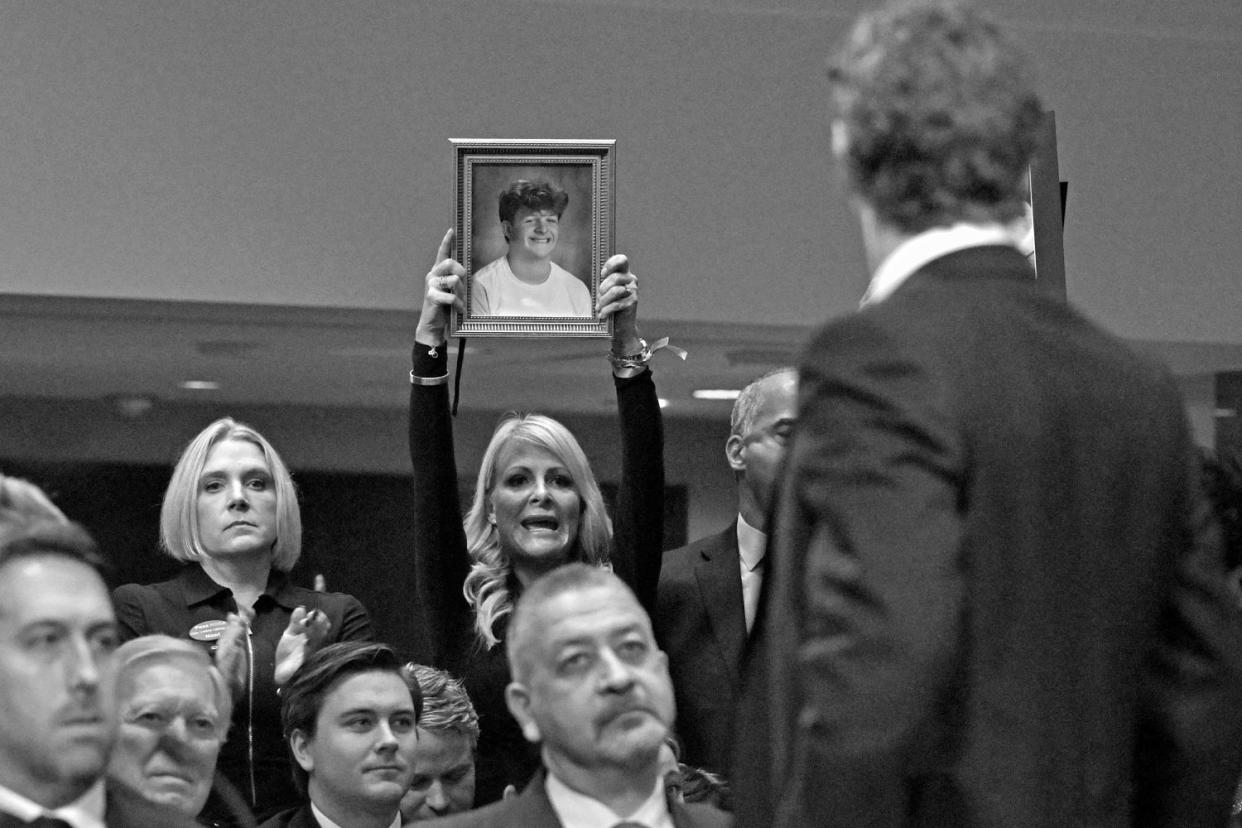

Along with other parents, DeSerio was in the audience, holding a photo of Mason.

As Meta CEO Mark Zuckerberg stood in an unprecedented moment during the hearing, apologizing to parents for their suffering, DeSerio stood, as well, holding Mason’s photo above her head.

The image would be broadcast around the world in photos and videos of the deeply emotional confrontation between one of the world’s most powerful people and the parents who have been trying to get his attention for years.

“I thought my purpose as his mom died that night with him,” DeSerio said. “Little did I know that my purpose just transformed.”