The campaign by US states to curb social media will be tested in 2024

US states are testing a series of new legal ploys and laws designed to erode social media's reach, creating a prime battleground for the tech industry in 2024.

Attorneys general and lawmakers from states across the US spent much of 2023 trying to pressure outfits like TikTok, Facebook, and Instagram with outright bans, legislation regulating their content, or lawsuits alleging the companies violated state consumer protection laws.

States passed at least 65 new tech laws this year targeting child safety, data privacy, artificial intelligence, and content moderation, according to a new report from the University of North Carolina's Center on Technology Policy.

Some of these moves imposed tighter restrictions and scrutiny on the industry, while others met with setbacks, raising new doubts about whether the experiments will ultimately succeed.

The aggressive campaigns by states raise some tricky questions that could be tested before the Supreme Court and numerous other legal forums in 2024.

Should tech firms be allowed to decide what they allow on their platforms without government interference? And can material potentially harmful to children be restricted without also restricting free speech?

But the states may succeed where the federal government has fallen short thus far in efforts to limit the reach of tech firms, said Matt Perault, a UNC professor and director of its Center on Technology Policy, who once worked for Facebook.

Perault cited recent defeats handed to federal antitrust regulators suing to stop Microsoft's (MSFT) acquisition of game developer Activision Blizzard and Meta's (META) acquisition of virtual reality app developer Within.

"Novel lawsuits are sexy, but they don't generally make for winning strategies," Perault said. "If you want to change the technology sector, you actually have to win cases or pass new legislation."

Roadblocks

Some of the states' attempts to curb social media ran into roadblocks in 2023.

Earlier this month a federal judge in Montana temporarily blocked a state law banning TikTok within the state's borders from taking effect, saying it likely violated the First Amendment.

The law — intended to safeguard consumer data and prevent the Chinese government from using the Chinese-owned company to spy on Americans — was the first of its kind to apply to the general public (many states have separately banned government workers from using the app).

Other state setbacks this year came in the Midwest, the Southeast, and the West Coast.

An Indiana state court judge dismissed two cases alleging TikTok deceived users about the security of personal data.

In Arkansas, a federal judge blocked a new law, just before it was set to take effect, that would have required new users of social networks to prove their age.

In California, a federal judge blocked a new state law requiring special data safeguards for young online users.

One state, Utah, did have some success. Utah's Supreme Court denied TikTok's request to block the state’s consumer protection division from obtaining internal company documents after the attorney general sued the company in October.

Federal protections

Some other cases filed toward the end of the year should get a lot of attention in 2024, including a federal lawsuit from dozens of Republican and Democratic state AGs alleging tech giant Meta violated a federal child protection law and dozens of state laws prohibiting unfair and deceptive business practices.

These states allege that Meta's Facebook and Instagram deceptively presented their social media platforms as safe while at the same time collecting data on minors and using dopamine-manipulating algorithms to exploit the children for profit.

The federal online privacy law Meta violated, according to the state AGs, is the Children’s Online Privacy Protection Act, or COPPA, which went into effect in 2000. The FTC has proposed strengthening its rule implementing the law to limit companies’ ability to monetize children’s data.

COPPA requires operators of commercial websites and online services directed at children under 13 to post their privacy policies online and provide parents with direct notice of their information practices. Operators must also obtain verifiable consent from a parent or guardian before collecting a child's personal information.

The lawsuits target the platforms' design, rather than specific content, which is a way for the state AGs to tiptoe around another federal law that offers tech companies greater protection against liability: the Communications Decency Act.

Signed into law in 1996 as public access to the internet proliferated, the CDA enabled online platforms to make “good faith” moderation efforts to remove “objectionable” content.

A key portion of the law, a controversial provision known as Section 230, shields platforms from being treated as the publisher of third-party content and lets them moderate that content freely. That immunity makes them distinct from publishers, which can be sued over harmful content they publish.

For decades, the law has insulated platforms like Facebook, Instagram, YouTube (GOOG, GOOGL), Twitter, and TikTok from bearing legal and therefore financial risks for harm allegedly caused by their users' posts.

Its sweeping immunity shielded TikTok from liability in the death of a 10-year-old Pennsylvania girl who attempted a high-risk "blackout challenge" after learning it from a viral video that the company's algorithm placed in her feed.

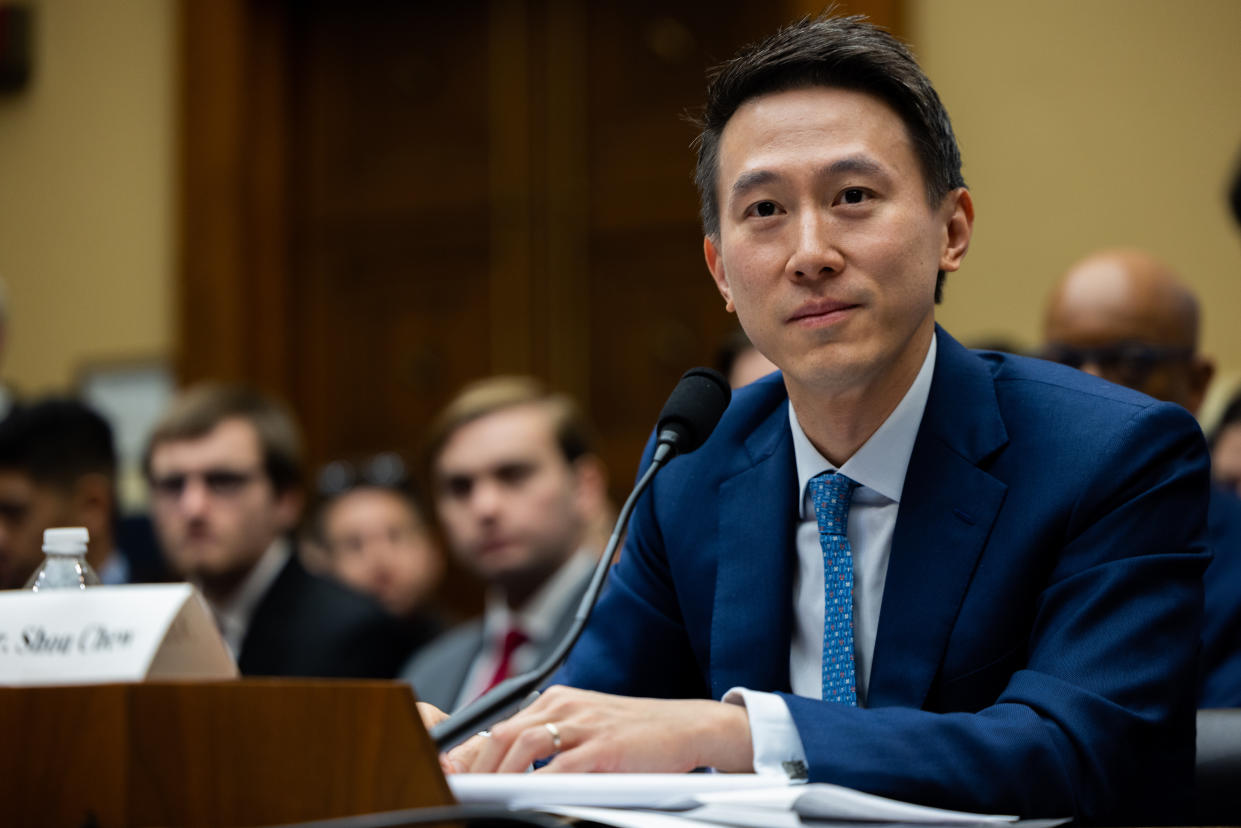

"Section 230 was never intended to shield companies like yours from amplifying dangerous and life-threatening content to children," Rep. Bob Latta told TikTok CEO Shou Chew during a hearing before the House Energy and Commerce Committee in March.

It's 'up to the Supreme Court'

The Supreme Court has already wrestled with a pivotal case testing the strength of Section 230.

In May the high court decided a pair of cases against Alphabet's Google, Twitter, and Facebook in the companies' favor, ruling that the plaintiffs had not shown the tech titans "aided and abetted" terrorist attacks through the content on their platforms. The justices declined to rule on Section 230 itself.

The cases were brought by families of people killed in terrorist attacks, who argued the platforms had helped promote the groups responsible.

In a win for the tech defendants, the court left Section 230 unchanged.

The Supreme Court will get another chance to weigh in on content moderation in 2024. It is set to hear cases that challenge laws in Florida and Texas that restrict major social media companies from moderating user posts that express certain viewpoints, including political views.

A federal appeals court upheld the Texas law, while a separate appeals court blocked key parts of the Florida law.

Other states may be waiting to see how the high court rules before bringing more legal cases or proposing new laws challenging how tech firms moderate their content.

Legislation on that topic showed a significant drop in 2023, according to UNC's report.

Protecting child safety and personal data online was a much more popular route. This year, lawmakers in 13 states passed 23 new child safety bills, and lawmakers in 16 states passed 23 new data privacy bills.

Only one state law protecting child safety was passed in 2022, though some states did include child safety regulations in comprehensive legislation. In 2022, two states passed comprehensive privacy legislation.

"We saw major action in child safety [laws]," Scott Babwah Brennen, head of online expression policy at UNC's Center on Technology Policy, told Yahoo Finance. "The headline for us in the report as it concerns content moderation was actually how little we saw."

Content moderation, Babwah Brennen said, "is all up to the Supreme Court."

Alexis Keenan is a legal reporter for Yahoo Finance. Follow Alexis on Twitter @alexiskweed.
