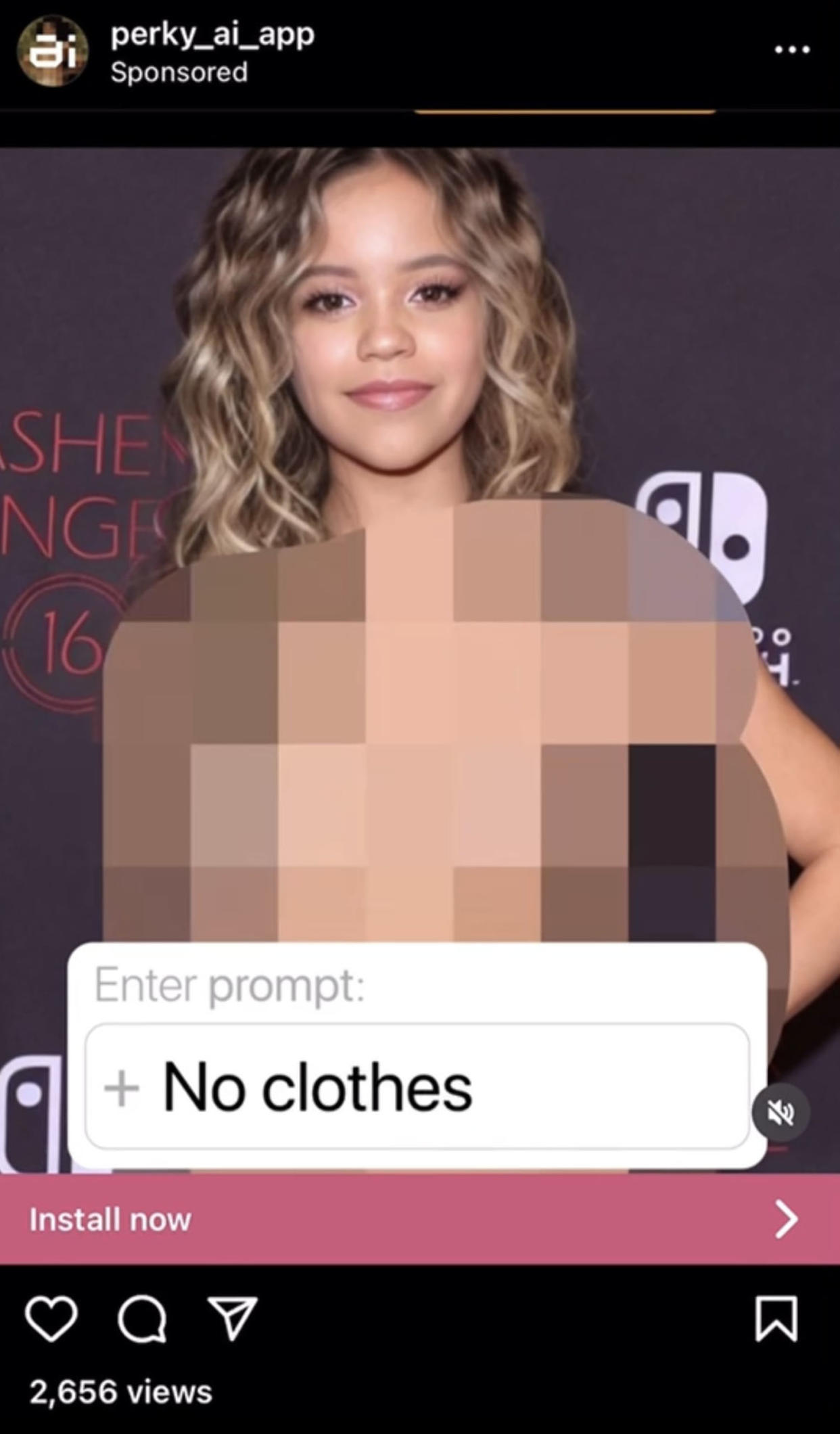

Ads on Instagram and Facebook for a deepfake app undressed a picture of 16-year-old Jenna Ortega

Facebook and Instagram hosted ads that featured a blurred fake nude image of an underage celebrity used to promote an app that billed itself as a way to make sexually explicit images with artificial intelligence.

A review of Meta’s ad library showed that the company behind the app ran 11 ads that used a manipulated, blurred photo of “Wednesday” actor Jenna Ortega, taken when she was 16 years old. The ads appeared on the two platforms, as well as on Meta’s Messenger app, for most of February. The app, called Perky AI, advertised that it could undress women with artificial intelligence.

The ads showed how the Perky app could change Ortega’s outfit in the photo based on text prompts, including “Latex costume,” “Batman underwear” and finally, “No clothes.”

The app promised it could make “NSFW” images — shorthand for “not safe for work” and meaning nude or sexually explicit.

The company listed as the developer of the Perky AI app is called RichAds. RichAds’ website calls the company a “global self-serve ad network” offering companies ways to create “push ads” and other kinds of pop-up ads and notifications. The company address is in Cyprus, and the company did not respond to a request for comment.

After NBC News reached out to Meta, the company suspended the Perky app’s page, which had run more than 260 different ads on Meta’s platforms since September. It is unclear how many people viewed the ads, but one of the Ortega ads on Instagram had over 2,600 views. Thirty of those ads had previously been suspended for not meeting Meta’s advertising standards, but the ones that featured the underage picture of Ortega were not among them. NBC News couldn’t view the ads that Meta had already suspended. Some of the Perky app’s other recent ads, which Meta didn’t take down until NBC News reached out, featured a picture of singer Sabrina Carpenter taken when she was in her early 20s, along with the same claim about making her look nude. Representatives for Ortega and Carpenter didn’t respond to requests for comment.

“Meta strictly prohibits child nudity, content that sexualizes children, and services offering AI-generated non-consensual nude images,” Ryan Daniels, a Meta spokesperson, said in a statement.

Apple also removed the advertised Perky app from its App Store after NBC News reached out. The app did not appear to be available on Google Play.

It’s not clear if the Perky app has been able to run ads on other platforms, as most companies do not publicly archive their ads as Meta does.

The advertisements are part of a growing crisis online, where fake nude images of girls and women, as well as fake sexually explicit videos, have spread widely thanks to the increasing availability of tools like the one NBC News identified. More nonconsensual sexually explicit deepfake videos were posted online in 2023 than in every other year combined, according to independent research from deepfake analyst Genevieve Oh and MyImageMyChoice, an advocacy group for deepfake victims. The same research found that Ortega is among the 40 most-targeted celebrity women on the biggest deepfake website.

Last week, Beverly Hills, California, police launched an investigation into middle school students who school officials said were using such tools to make fake nude photos of their equally young classmates. NBC News also reported last week that some top Google and Bing search results included manipulated images featuring child faces on nude adult bodies.

Images and videos that falsely depict people as nude or engaged in sexually explicit conduct have circulated online for decades, but with the use of artificial-intelligence tools, such material is more realistic-looking and easier to create and spread than ever. When misleading media is created with AI, it is often called a “deepfake.”

Nonconsensual sexually explicit deepfakes overwhelmingly target women and girls. Adult victims face a legal gray area, while protections against computer-generated sexually explicit material of children have not always been enforced.

Apple does not have any rules about deepfake apps, but its App Store guidelines prohibit apps that include pornography, as well as apps that create “defamatory, discriminatory, or mean-spirited content” or are “likely to humiliate, intimidate, or harm a targeted individual or group.”

According to Apple, the Perky AI app had already been rejected from the App Store on Feb. 16 for violating policies around “overtly sexual or pornographic material.” The app was under a 14-day review period when NBC News reached out, but it was still available to download and use during that time. Since then, Apple said it has removed the Perky app from its store and suspended the company behind it from its developer program.

The app is still usable for people who already downloaded it. It charges $7.99 a week or $29.99 for 12 weeks. When NBC News tried to use the app to edit a photo, it required payment to see the results. Before it was removed from Apple’s App Store, the app had a 4.1-star rating with 278 reviews.